Helping an AP History Education Tool Increase User Retention

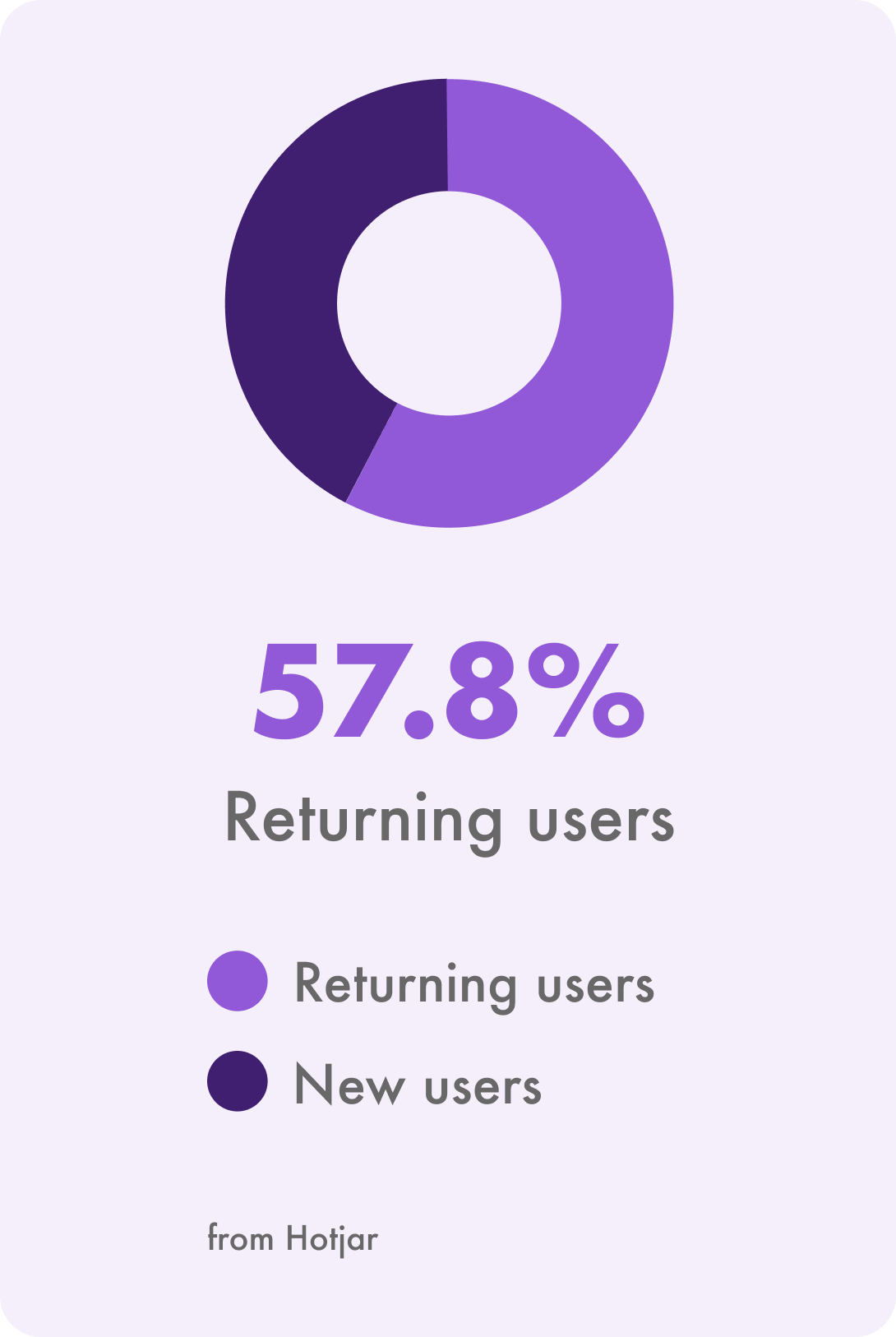

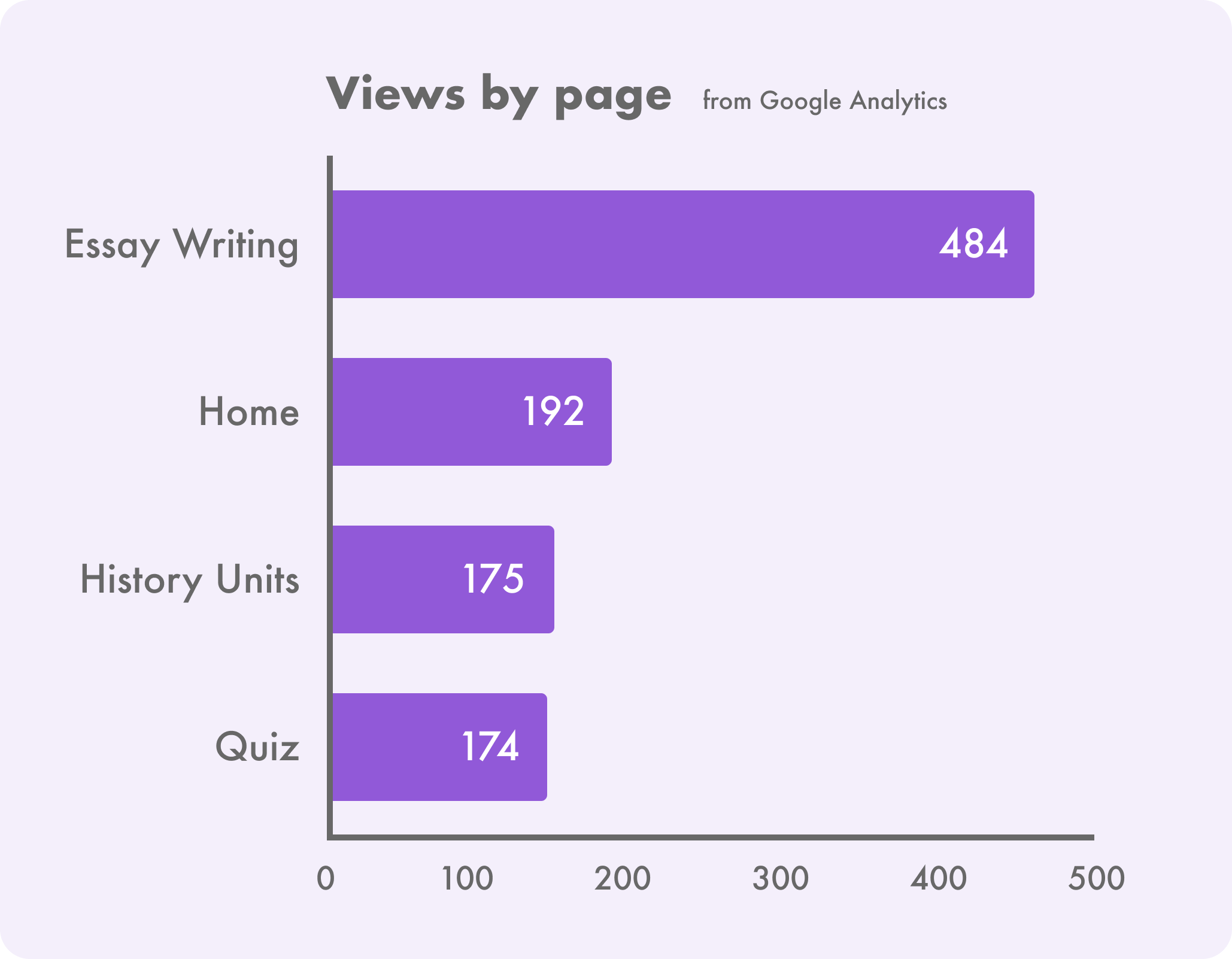

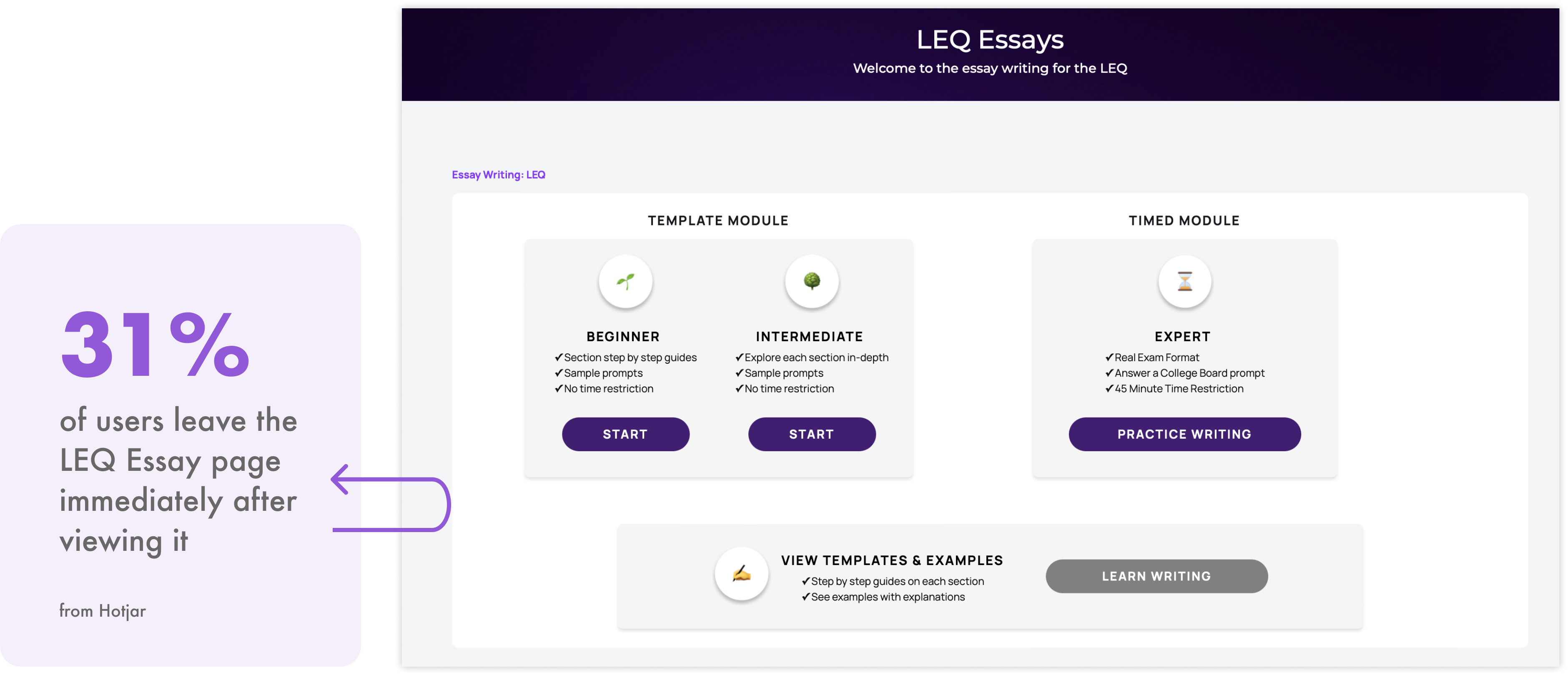

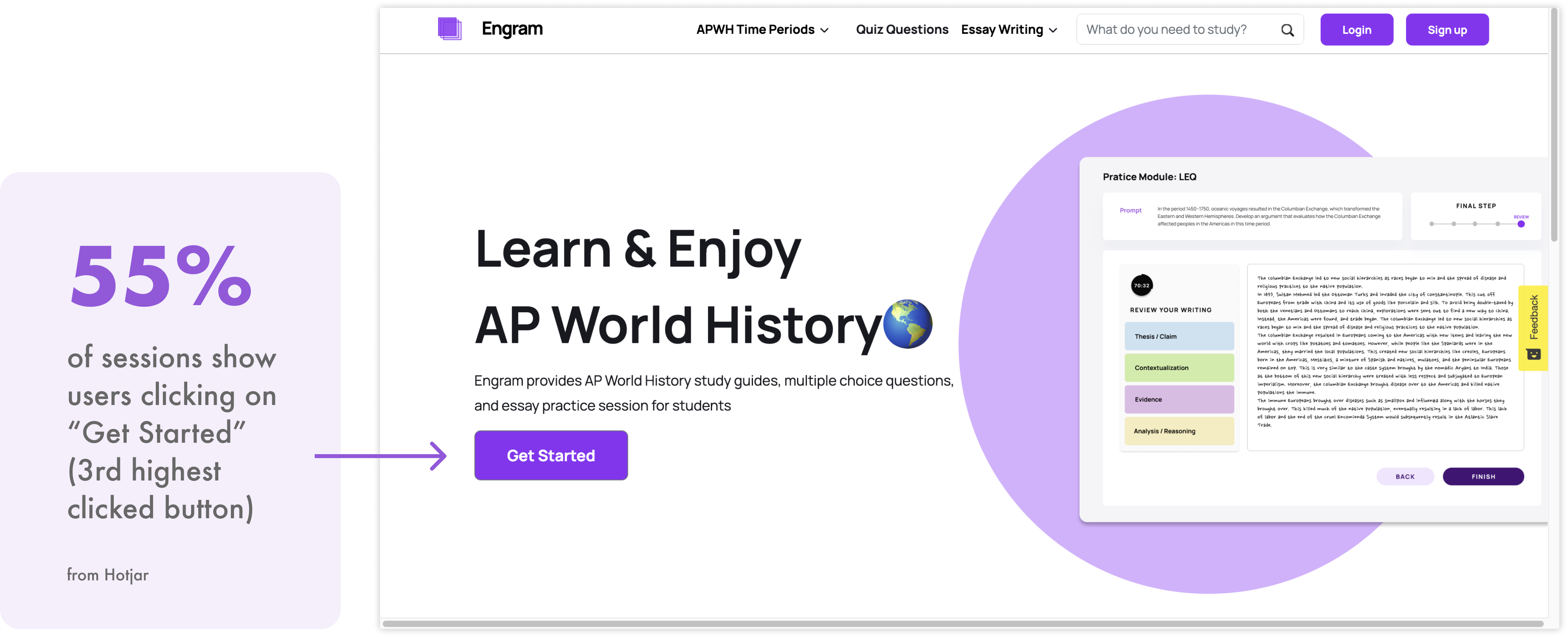

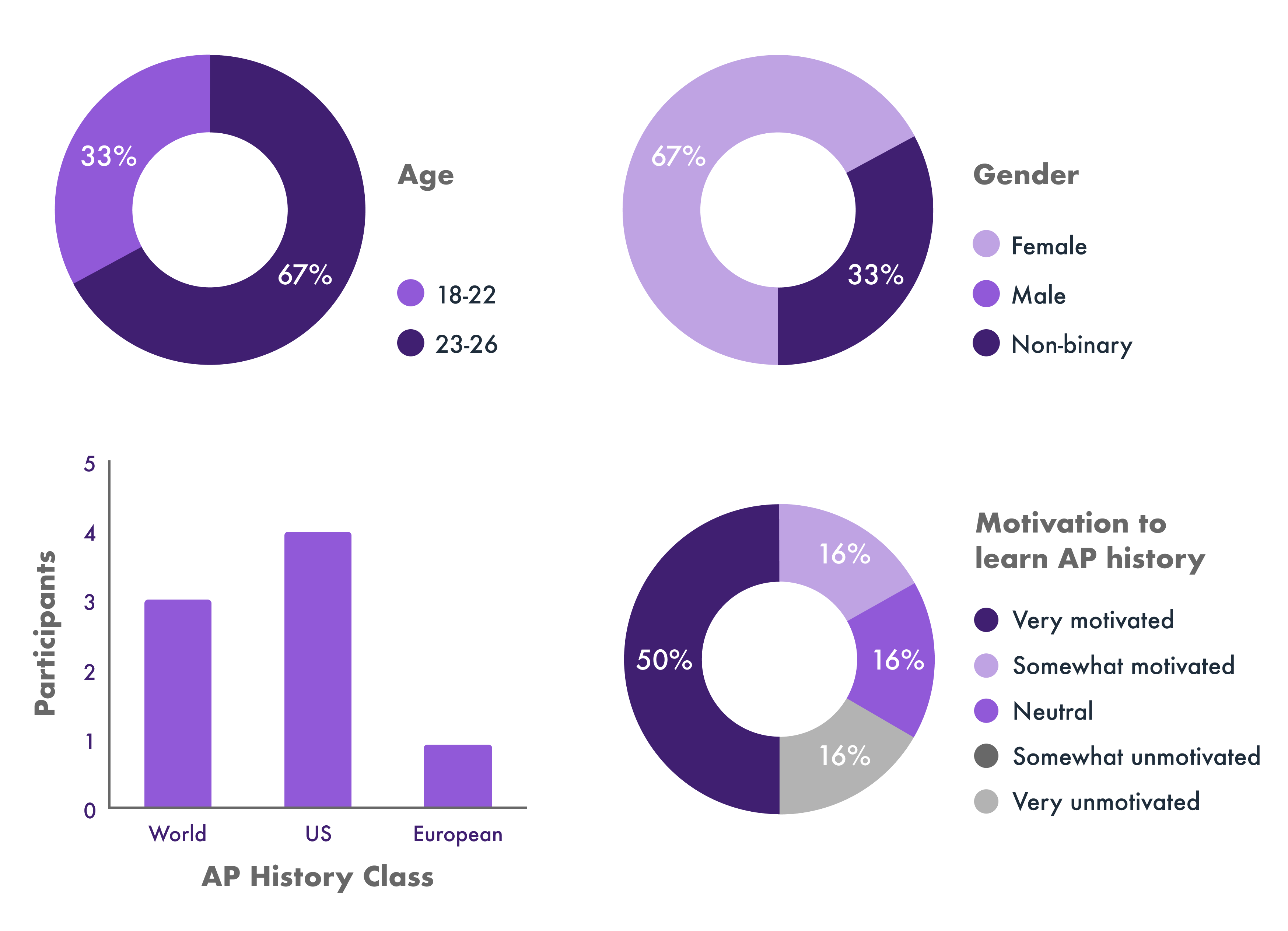

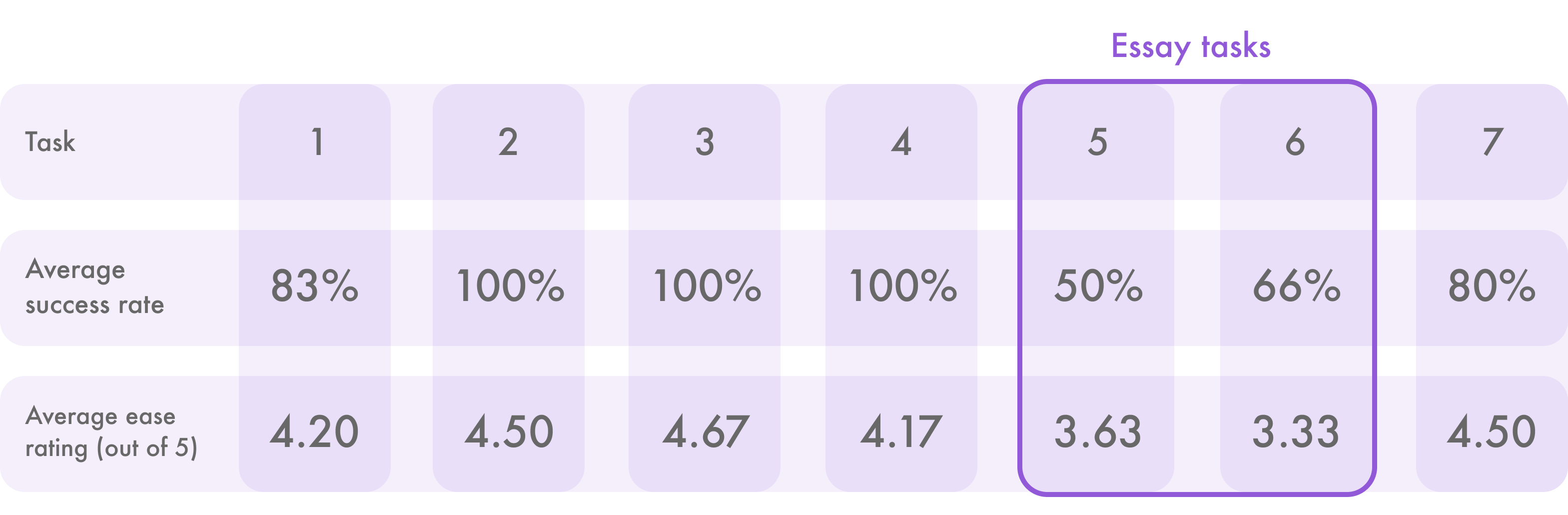

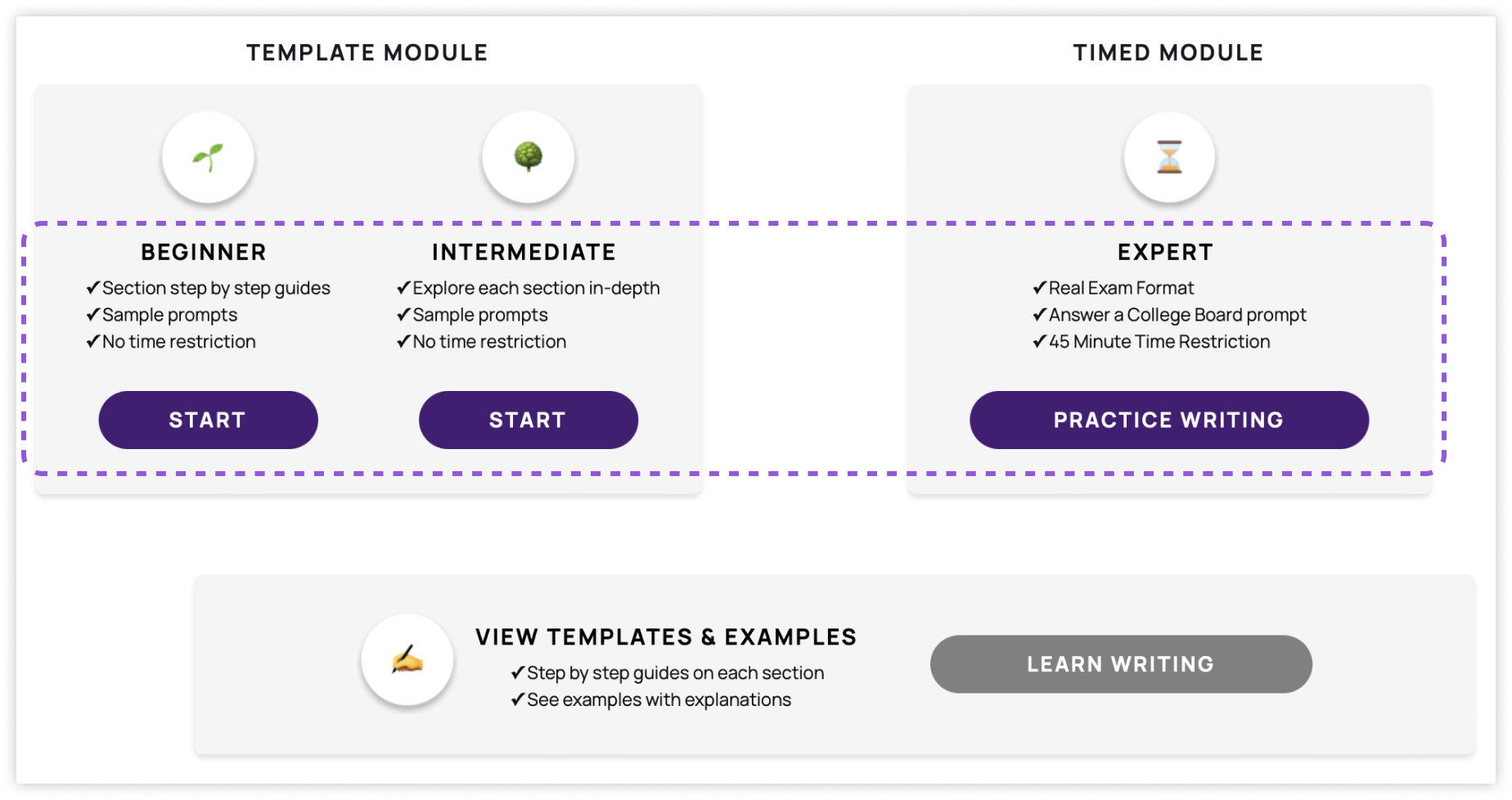

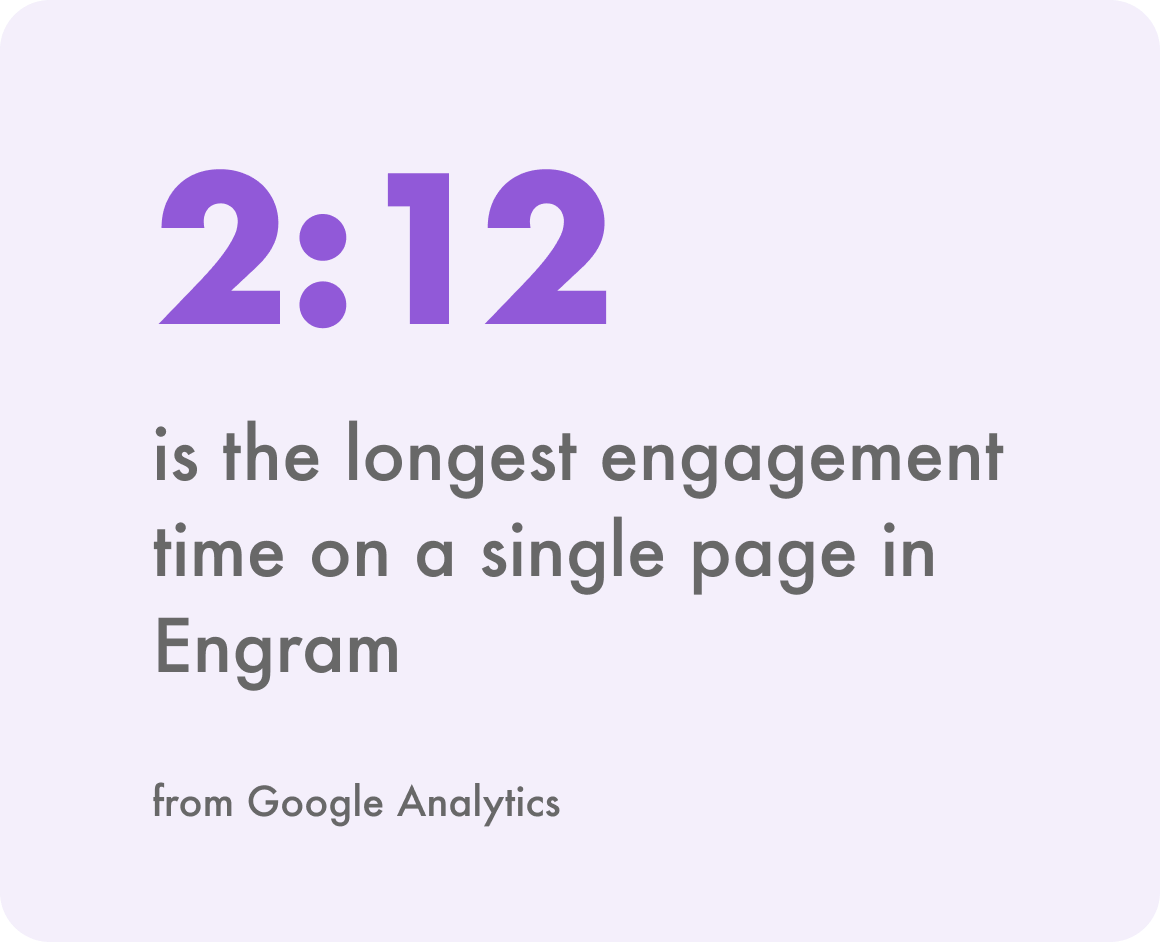

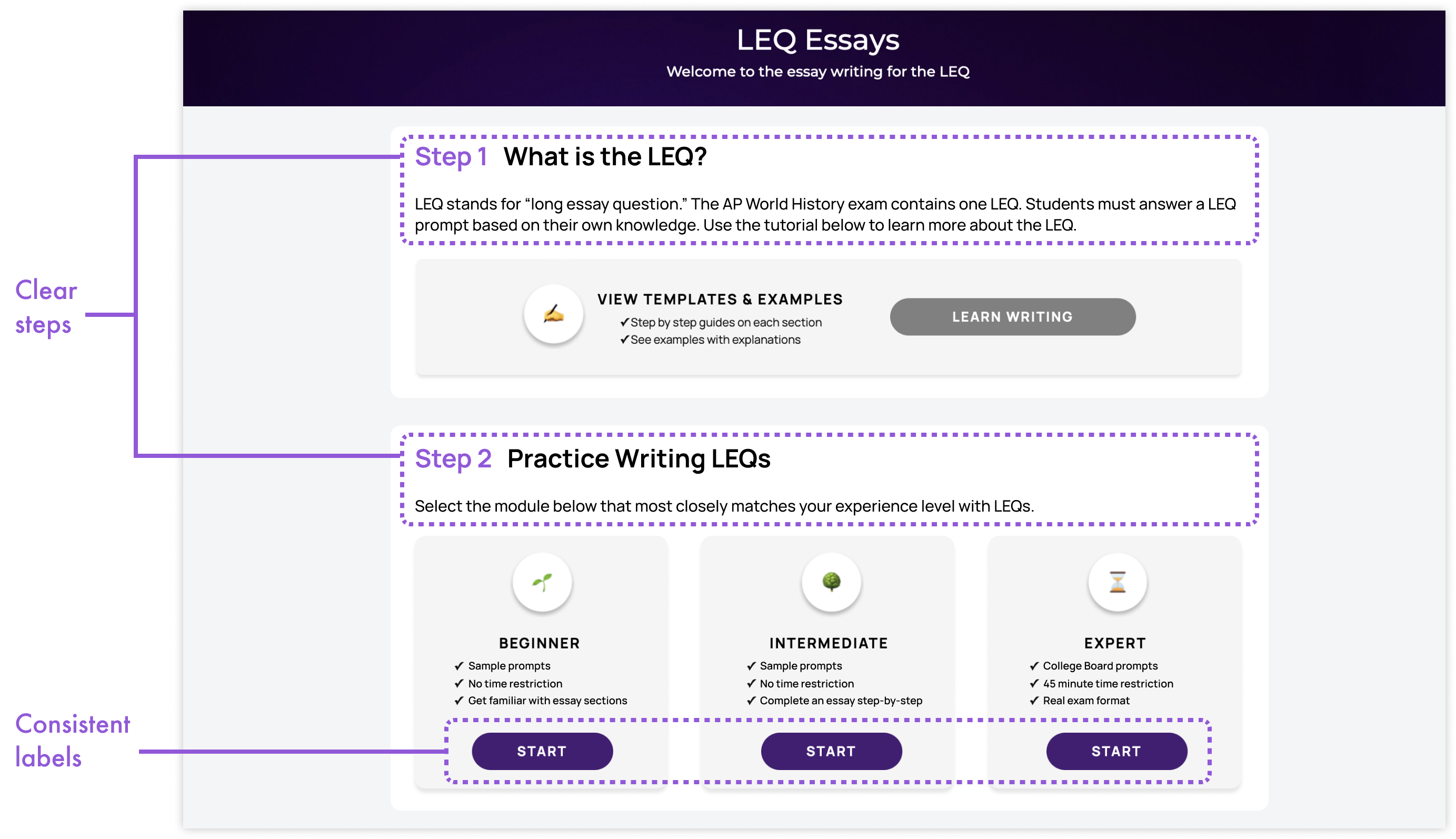

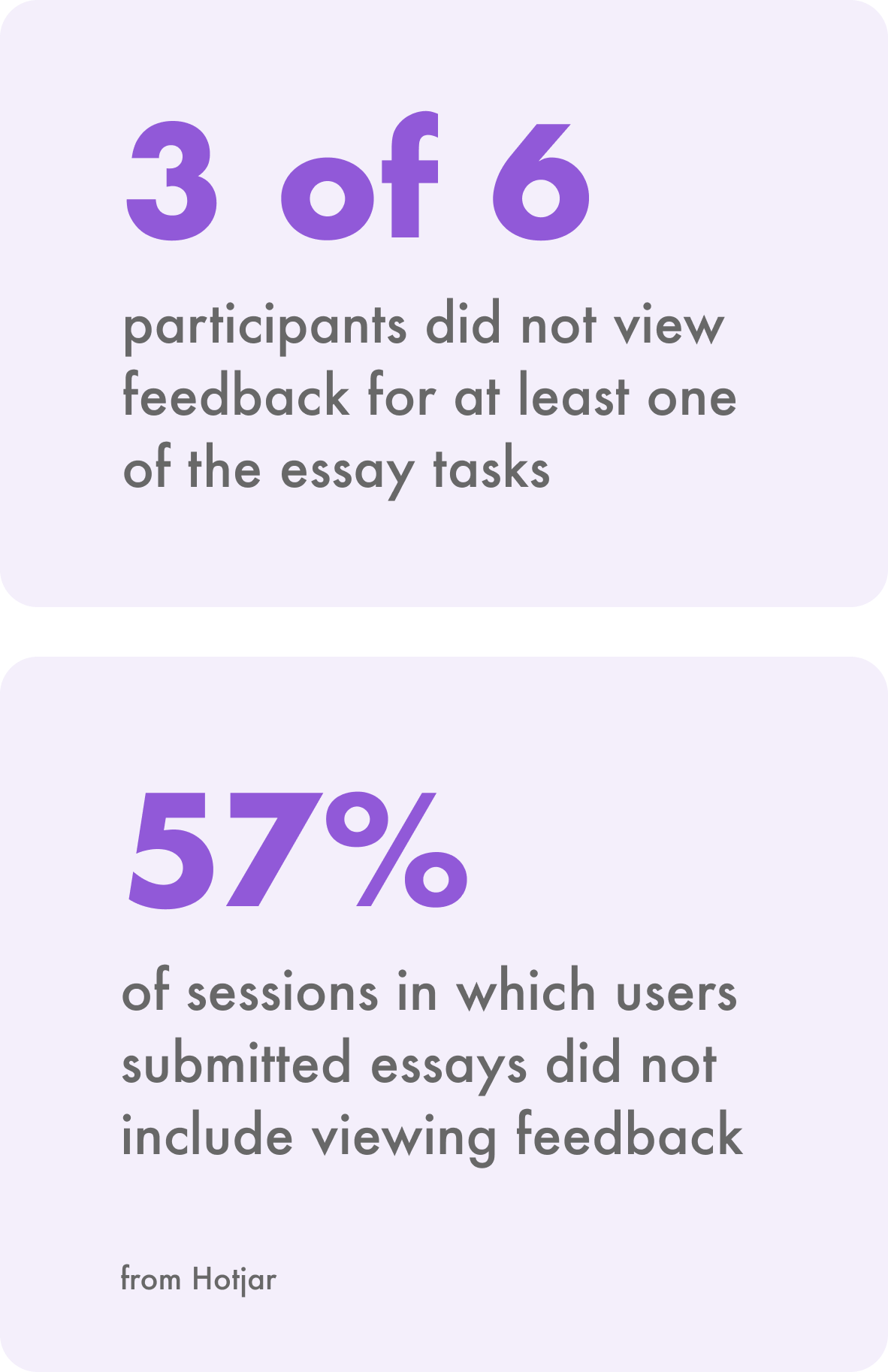

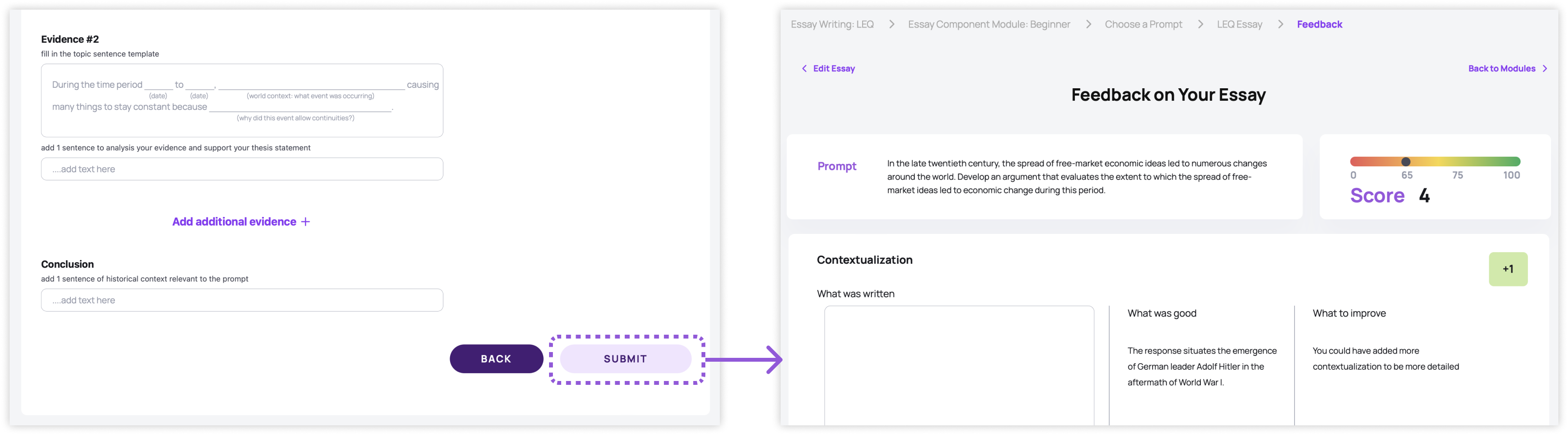

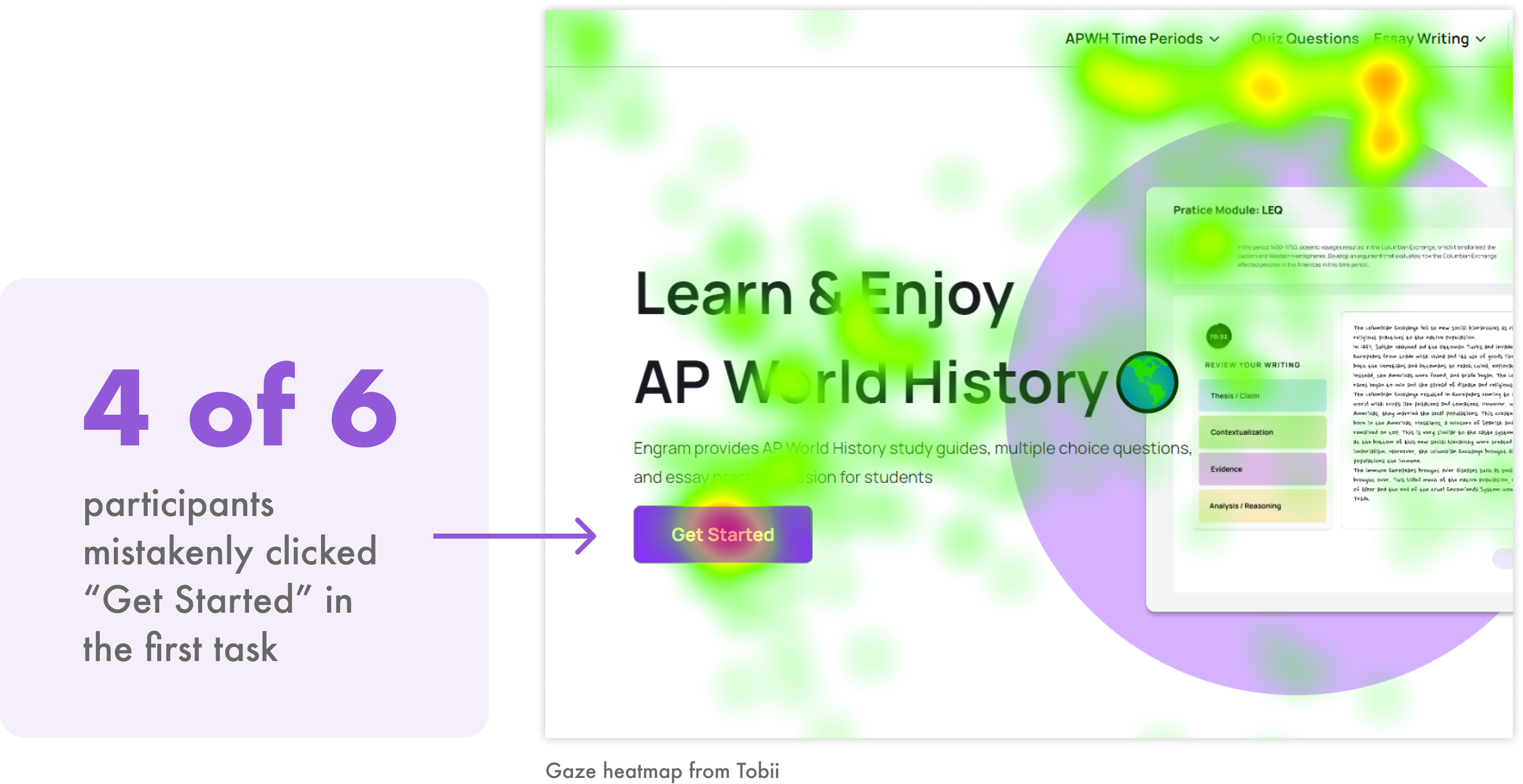

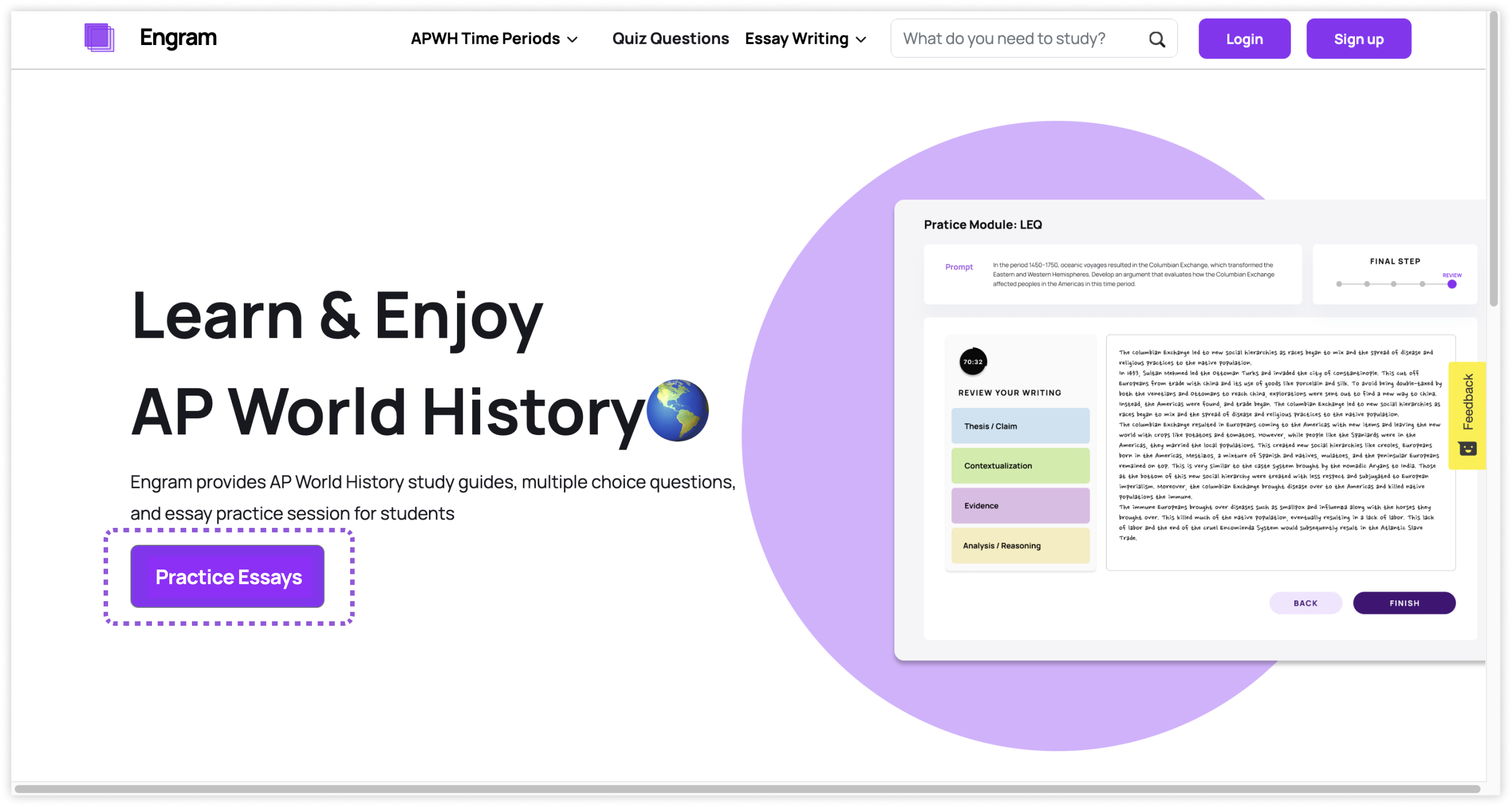

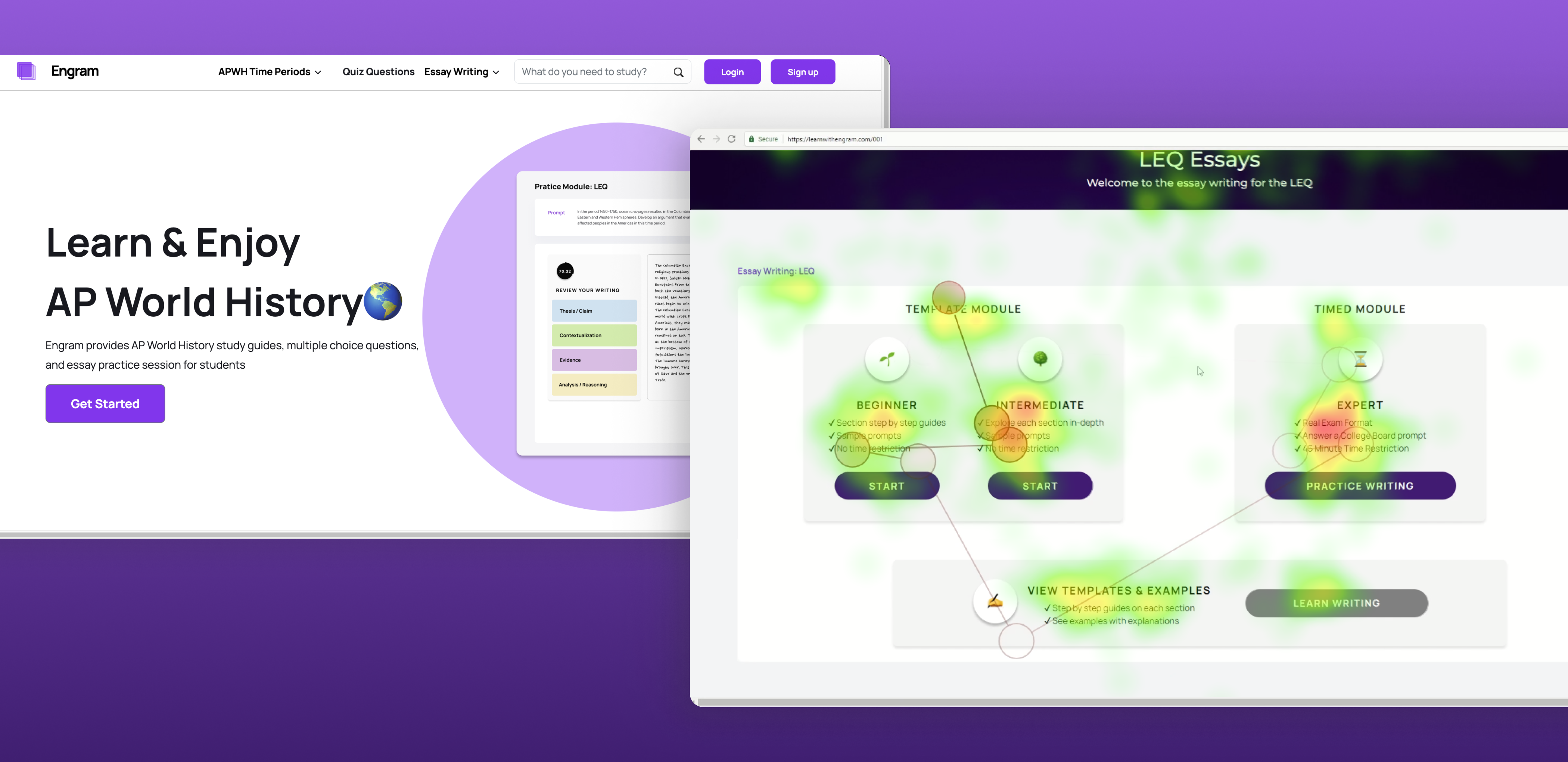

Engram is an AP World History education website that provides informative content, detailed quizzes, and thorough practice essays with feedback. To deliver a more engaging experience and increase user retention, Engram needed usability research. This study investigated engagement with Engram using moderated usability testing with eye-tracking and behavioral analytics data. We found that the website's navigation was efficient and its content effective overall, but we proposed improvements to the clarity of its call-to-action and to the guidance for its essay-writing features.

When: September–December 2023

Team: Mary Haws (me), Priyanka Jain, Becky Su

My Role: planning research, writing screening questions, recruiting participants, conducting pilot tests, moderating eye-tracking sessions, analyzing behavioral data, coding qualitative data, creating mockups, communicating findings